AI Doesn't Have To Be a Cult

Content/Possible trigger warning: existentialism, misogyny, cult behaviors/survival.

6/26/2025 Update: Slight clarification to #8 and #9.

“A big secret is that you can bend the world to your will a surprising percentage of the time — most people don’t even try,” he wrote on his blog. “The most successful founders do not set out to create companies. They are on a mission to create something closer to a religion, and at some point it turns out that forming a company is the easiest way to do so.” - Sam Altman, CEO of OpenAI

Excerpt from Bill Wurtz's history of the entire world, i guess: "You Could Make a Religion Out of Th- no don't"

There's a saying that "cults + time = religion", but that equation doesn't tell the whole story about the difference between cults and religions. Both words can imply a worldview, but neither always means coercive control, or a leader at the top of the hierarchy who never gets questioned.

Here's a checklist of criteria for cults. Some of it could easily be applied to the hype around AI and the AI-first mentality.

#1 "The group is focused on a living leader to whom members seem to display excessively zealous, unquestioning commitment."

Maybe the word "AI" alone isn't a group, but the companies that revolve around it are. I'm hoping not all of them have "excessively zealous, unquestioning commitment", but I don't doubt some of them do at this point. You can't talk about the state of the creative industries without talking about things like Midjourney anymore. You can't talk about coding as a job without mentioning the AI tools that are supposed to help you, like Claude or GitHub Copilot.

#2 "The group is preoccupied with bringing in new members."

A.k.a. "AI-first", which almost destroyed Duolingo, to the tune of seven billion dollars, along with its social media presence.

#3 "The group is preoccupied with making money"

America is already money-obsessed, and unsurprisingly, its people are pressured to be as well. But at what cost? For what kind of disruption?

Try to imagine asking for 40 billion dollars as a normal person, or raising 6 billion for a chatbot that insists that white South Africans are going through a genocide and will "undress" photos of women.

Anyone else asking for that kind of money would be considered delusional at worst and cash-poor at best, but not if you're the head of an AI company.

#4 "Questioning, doubt, and dissent are discouraged or even punished."

We seem to be trading our data for the erosion of privacy. This interview basically sums it up.

#5 "Mind-numbing techniques (such as meditation, chanting, speaking in tongues, denunciation sessions, debilitating work routines) are used to suppress doubts about the group and its leader(s)."

Maybe "mind-numbing techniques" doesn't exactly apply here, but there's something to be said about "debilitating work routines". Amazon Mechanical Turk (MTurk) comes to mind. Without it, ImageNet would not have worked. There's a paradox here: AI is supposed to replace work, but to do that, it first has to rely on humans to label data correctly, and that work is not free of exploitation.

#6 "The leadership dictates sometimes in great detail how members should think, act, and feel (for example: members must get permission from leaders to date, change jobs, get married; leaders may prescribe what types of clothes to wear, where to live, how to discipline children, and so forth)."

Maybe we haven't reached the Mormon-undergarments level of this (yet), and speaking as someone who was baptized Mormon as a child, I hope we don't...

... Oh wait. There's this. Hopefully the meditation activity will make up for the no-yawning rule and we haven't completely butchered a generation of kids.

#7 "The group is elitist, claiming a special, exalted status for itself, its leader(s), and members (for example: the leader is considered the Messiah or an avatar; the group and/or the leader has a special mission to save humanity)."

I don't feel that AI has saved humanity, or is going to, as repeatedly promised. I don't need a robot to tell me if breakfast is ready, especially when I'm the one making it. My toaster also doesn't need to be connected to WiFi... It's a damn toaster.

Even AI in healthcare has its own failures.

#8 "The group has a polarized us-versus-them mentality, which causes conflict with the wider society."

#9 "The group’s leader is not accountable to any authorities (as are, for example, military commanders and ministers, priests, monks, and rabbis of mainstream denominations).

The group teaches or implies that its supposedly exalted ends justify means that members would have considered unethical before joining the group (for example: collecting money for bogus charities)."

Plenty of examples could easily demonstrate both #8 and #9. Maybe this, and if you're looking at that and wondering how important truck driving is as a job... Yes. It is important. It's the reason why you see anything in a supermarket. It's the reason why my Dad came home (roughly a decade ago) with a ton of food that a supermarket was going to throw out because of a printing error on the packaging. It's a massive industry, in Texas especially, and driverless trucks are just going to be rolled out without any consideration for how entire families could be impacted, or for how safe it is to have driverless trucks on the highway.

#10 "The leadership induces guilt feelings in members in order to control them.

Members’ subservience to the group causes them to cut ties with family and friends, and to give up personal goals and activities that were of interest before joining the group.

Members are encouraged or required to live and/or socialize only with other group members."

We might be almost there, if we allow ourselves to get there.

An accountant in Manhattan started using ChatGPT last year to make financial spreadsheets and to get legal advice. Then he started asking it more existential questions, which led to a mental health spiral.

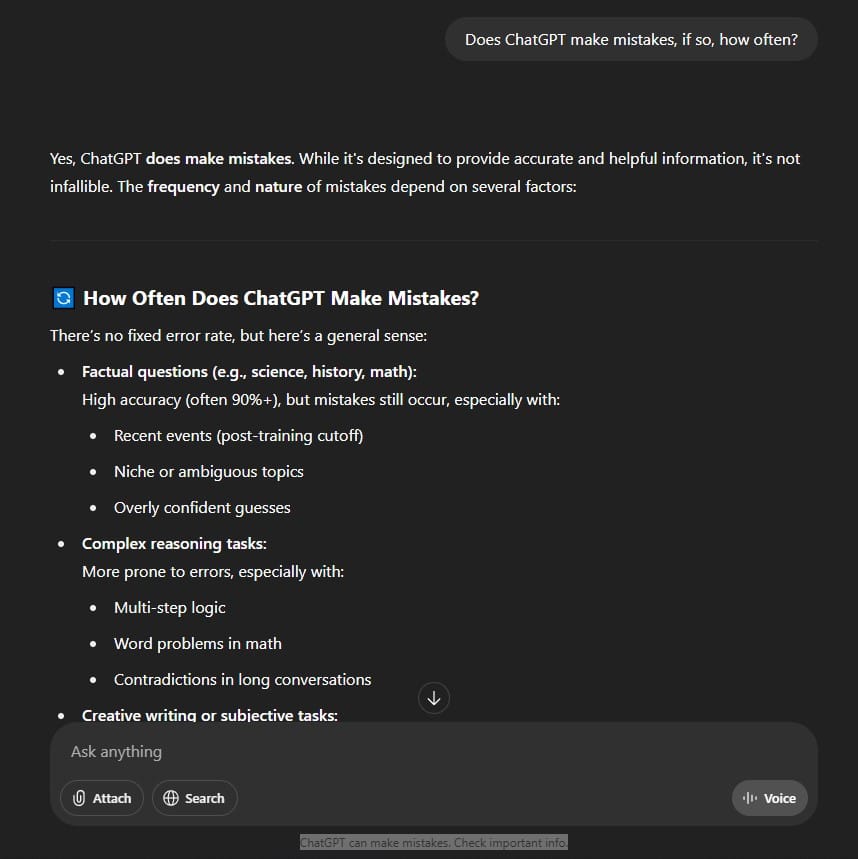

Yes, after you type a prompt into ChatGPT, there's small text stating that "ChatGPT can make mistakes. Check important info". That's fair, but with its increasing encroachment into people's lives, it feels like the mistakes should, at the very least, be small. ChatGPT, according to ChatGPT, isn't right 99% of the time (the kind of uptime a lot of cloud services promise). It's right 90% "+" of the time... According to itself, and I honestly wonder how accurate that 90%+ is or where it got that number.

If you were encouraged to rely on ChatGPT or some other LLM tool as something that can make your life easier, how far would you take it? How often would you "check important info"?